November 4, 2014

"Earth as a Simulation Series 2: Are we simulated copies of people? How slowing down technological development in your simulation gets around the potential glitch problem of people recursively building sims within a sim. However, an accurately simulated population will STILL present specific experiences even though the technologies these experiences depend on DON'T YET exist (immersive VR experiences, for example). This series presents evidence of anomalous 'missing technology' experiences, evidence that these experiences are being obscured, and evidence that the simulation we are in was built in the last few decades."

If our hypothetical Earth simulation project works in a similar way, using the same kinds of techniques and strategies we already use to reduce processing cycles and to cut down on processing-intensive 3D frame rendering computations (which you’d expect, because it would be an outgrowth of what we are using now), might there be side effects of this that we ourselves could detect?

It shouldn’t take much to extend your thinking beyond what was presented on the previous page to realize that our simulation software absolutely HAS to keep track of, calculate and define all interactions of absolutely everything here in moment-by-moment detail IN ADVANCE OF ANYTHING HAPPENING. In other words, because it would be simulating copied people ACCURATELY, it would be forced to calculate everything accurately well BEFORE ANYTHING VISIBLE ACTUALLY HAPPENS HERE.

Having to pre-calculate everything, it is seriously likely to use a whole range of strategies for reducing processing and calculation complexity. If this ‘IS’ what it does, then it is highly likely that the software will be pre-generating a buffer (a cache) of already defined, pre-rendered frames for each simulated person and their viewable environment.

Depending on the ‘state’ of people and their immediate future circumstances, this pre-buffering might actually hold many seconds of pre-defined, ‘ready to render’ frames.
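To make the idea of a pre-render buffer concrete, here is a minimal Python sketch of the generic look-ahead technique that games and video players use: frames are rendered some ticks before they are shown, so at any moment the buffer holds ‘future’ frames the viewer has not yet seen. The class and method names (`PreRenderBuffer`, `display_next`, `peek_future`) and the `lookahead` size are illustrative assumptions of mine, and the ‘rendering’ is just a placeholder string; it says nothing about how an actual simulation would do it.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Frame:
    tick: int     # simulation tick this frame belongs to
    content: str  # placeholder for rendered image data (hypothetical)


class PreRenderBuffer:
    """Bounded look-ahead buffer: frames are rendered before they are displayed."""

    def __init__(self, lookahead: int) -> None:
        self.lookahead = lookahead           # frames to keep ready in advance (assumed)
        self.frames: deque[Frame] = deque()  # rendered but not yet displayed
        self.next_tick_to_render = 0

    def _render(self, tick: int) -> Frame:
        # Stand-in for the expensive rendering step.
        return Frame(tick, f"frame-{tick}")

    def fill(self) -> None:
        # Render ahead until the buffer holds `lookahead` frames.
        while len(self.frames) < self.lookahead:
            self.frames.append(self._render(self.next_tick_to_render))
            self.next_tick_to_render += 1

    def display_next(self) -> Frame:
        # The viewer only ever sees a frame that was rendered earlier.
        self.fill()
        return self.frames.popleft()

    def peek_future(self) -> list[int]:
        # Ticks of frames that already exist but have not been shown yet.
        return [frame.tick for frame in self.frames]
```

With `lookahead=3`, displaying frame 0 leaves frames 1 and 2 already rendered and waiting. That already-defined but not-yet-seen future is the kind of ‘buffer’ the argument here relies on.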

On the previous page I also made it clear that, in situations where people are attracted to each other, the simulation is very likely pre-defining these people’s feelings and emotional reactions when they are SIMULATED as being with each other.

In these circumstances, ‘IF’ the simulation is pre-rendering frames AND these frames include pre-defined emotional responses, is it possible that we might be able to detect and measure some bleed-through of the pre-rendered frames holding the future emotional responses before those responses are actually experienced?

It would be easy to monitor people for very subtle bodily changes, to see whether at least subconsciously ‘something’ in them shows awareness of a future emotional reaction of a particular type.

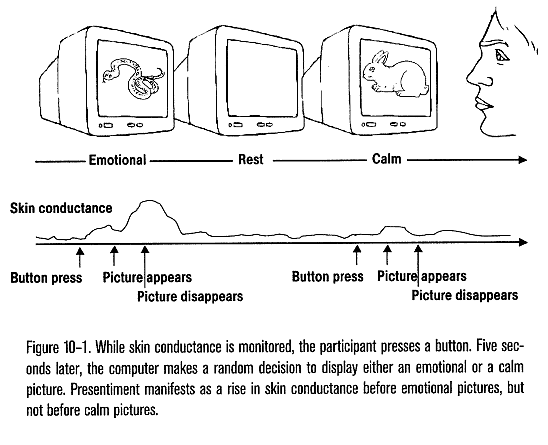

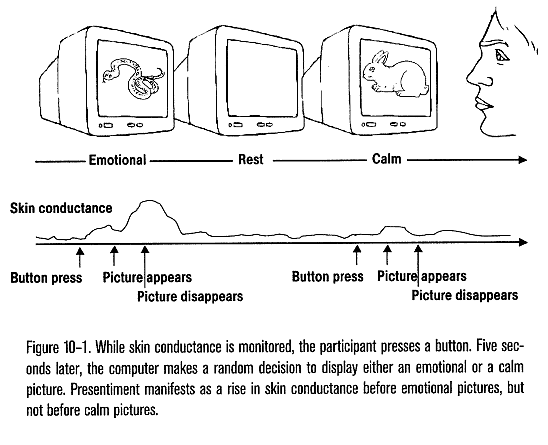

To do this, for example, you could set up a computer to present different images that different people would likely react to with specific emotions. Someone would then press a button that triggers the computer to display one of these images, selected at random, a few seconds after the button is pressed.

In other words, if someone was going to be confronted by a certain event or presentation, and their reaction to it was pre-rendered and pre-buffered by the simulation, is it possible to pick up subtle effects in that person that could be construed as being due to commonly used data buffering techniques?

If we could measure such effects, and these measurements were even accurate enough to let us predict people’s emotional reactions well before specific pre-defined emotional events actually happen, would this research be recognized as evidence that our reality appears to be making use of commonly used processing overhead reduction strategies?

Well, actually, there is such research . . .

It is presented in Chapter 10, entitled ‘Presentiment’, of Dean Radin’s book ‘Entangled Minds’ (page 161).

Dean conducted 4 experiments measuring skin conductance to see whether it could predict events before they occurred.

Participants pressed a button to initiate the random presentation of a picture that was either ‘emotional’ or ‘calm’; the picture appeared after a 6-second delay. These studies showed a highly significant statistical difference between the skin conductance measured before emotional pictures were presented and that measured before calm pictures.

The combined odds against chance across the 4 separate experiments are 125,000 to 1. The greater the emotional response, the greater the skin conductance effect; the odds against chance of this correlation were 250 to 1.

Is this really evidence of ‘presentiment’ or is it possible that this is evidence of hypothetical earth simulation software buffering future reality frames for each individual?

I was in two minds over which explanation was more likely until I read that someone had detected the same response predicting a future event using earthworms. With PEOPLE watching them, the earthworms would have to be properly and visibly rendered, particularly if they were part of an experiment. Wouldn’t they?

I’ve been talking extensively with someone who was formerly a consultant advising people and groups putting together computer-based simulations (in other words, I’ve been doing my homework).

One of the things that would be done in a simulation of this complexity is that ONLY your focused attention point would hold the attention of the simulation software. The further away ANYTHING is from your focused, engaged attention point, the fuzzier its perceptual rendering becomes.

This is how you’d ‘render’ all perceptual and sensory information, BECAUSE it keeps the potentially massive data processing and calculation overheads to a minimum.
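This falloff of detail away from the attention point is essentially what VR developers call foveated rendering. The Python sketch below assumes a simple rule of my own choosing: full detail within `full_detail_radius` of the focus point, then one coarser level for each doubling of distance, capped at `max_level`. The function name and its parameters are hypothetical and for illustration only.

```python
import math


def detail_level(point, focus, full_detail_radius=1.0, max_level=4):
    """Level of detail for a point: 0 = full detail, higher = coarser (assumed rule)."""
    distance = math.dist(point, focus)
    if distance <= full_detail_radius:
        return 0
    # One coarser level per doubling of distance beyond the full-detail radius.
    level = int(math.log2(distance / full_detail_radius)) + 1
    return min(level, max_level)
```

If each level also halves the resolution along both axes (another assumption), a level-2 region needs roughly one sixteenth of the pixels of a level-0 region, which is where the processing saving comes from.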

Coincidentally, you might have noticed as you have been reading this that you have a highly detailed central ‘spot’ with a fuzzy ‘periphery’ for EVERYTHING you look at. This is exactly what you would expect if you needed to save on the processing required to render a fully detailed visual presentation. Coincidentally, we also have the ‘blurring’ of fine detail as your eyes, and hence your attention, shift from one area to another, which means you have to take a moment to ‘refocus’ on your new attention ‘spot’.

Coincidentally, this also means that the amount of information you have immediate access to, particularly for cross-referencing and ‘joining important dots’, is severely reduced. I say this because you have to keep moving your eyes and/or the viewed material around to ‘connect’ to possibly related information, and it becomes more difficult to ‘re-connect’ later to any related information you are rendered as still being able to remember. If I were designing this simulation, the small vision spot handicap is something I’d be very tempted to build in too. The last thing you’d want is for your managed people to have easy visual access to a large viewing field, particularly of any ‘worrying’ material, ALL AT ONCE.

Let me give you another example of how our ‘attention engagement’ could determine rendering resolution . . .

When you first wake up in the morning AND your attention is still orientated to the ‘inside your head’ space, then as you ‘groggily’ look around WITH YOUR ATTENTION STILL PRETTY MUCH IN YOUR HEAD, everything you see will ALL BE RENDERED FUZZILY. The lack of obvious detail (reduced-resolution rendering uses far less processing capacity) can leave your groggy head filling in the blanks all on its own, which can (for example) temporarily convince you that someone is standing in the corner of your room.

It is ONLY when you CONSCIOUSLY decide to engage your attention on the external environment that the ‘detail’ improves and you realize that the shadow person you were sure was standing in the corner of the room was actually a mishmash of different, poorly rendered things THAT ARE ALWAYS THERE.

‘IF’ we are in a simulation, then rooms you regularly use will be cached at different resolutions. When you first wake up and start looking around, the software will automatically use a low-resolution, locally cached version UNTIL you consciously engage your attention on the room, at which point it will render (or pull in from local memory) a more detailed version of the room.

It should be obvious that a simulation software system that has to pre-calculate absolutely everything for everybody well in advance will also ABSOLUTELY KNOW in advance when you will apply more ENGAGED ATTENTION to any area of your surroundings. It will then KNOW, many quantum clock ticks IN ADVANCE, which resolution it must use for each area of each 3D perceptual frame it renders to prevent anyone becoming suspicious.
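The caching-plus-‘knowing in advance’ idea maps onto a familiar programming pattern: keep several pre-rendered resolutions per location, and prefetch whichever one a schedule known ahead of time says will be needed. In this Python sketch, `RoomCache`, its methods and the two-level ‘low’/‘high’ split are hypothetical names and simplifications of mine, not anything known about a real system.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterable


@dataclass
class RoomCache:
    """Hypothetical cache holding pre-rendered low/high resolution versions per room."""

    versions: dict[str, dict[str, str]] = field(default_factory=dict)
    renders: int = 0  # counts expensive renders, to show what caching saves

    def _render(self, room: str, resolution: str) -> str:
        # Stand-in for an expensive render of one room at one resolution.
        self.renders += 1
        return f"{room}@{resolution}"

    def get(self, room: str, attention_engaged: bool) -> str:
        # Engaged attention selects the detailed version; otherwise the cheap one.
        resolution = "high" if attention_engaged else "low"
        room_versions = self.versions.setdefault(room, {})
        if resolution not in room_versions:
            room_versions[resolution] = self._render(room, resolution)
        return room_versions[resolution]

    def prefetch(self, schedule: Iterable[tuple[str, bool]]) -> None:
        # Warm the cache from an attention schedule known in advance.
        for room, engaged in schedule:
            self.get(room, engaged)
```

After `prefetch([("bedroom", False), ("bedroom", True)])`, both bedroom versions are cached, so the groggy low-resolution look and the later attentive high-resolution look cost nothing extra; only a room that was never prefetched triggers a fresh render.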

To save on processing, it is also likely that rooms you spend a lot of time in will even cache your own memories and habits associated with that room. You will then do things automatically, like opening the cutlery drawer without looking at it, or apparently even thinking about it, when you want a spoon to stir your morning coffee.

For example, ‘IF’ you THINK of something in a specific room AND that memory is wrongly and automatically associated with that room, then you may find yourself forgetting it as you move to another room that DOESN’T have that information tagged to it. Going back to the room where you originally had the thought would then very likely reload that memory, and unbelievably you’d find that what you couldn’t remember elsewhere you now can.
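The room-tagged memory described here behaves like a lookup keyed by context. This toy Python sketch is only an illustration of that data-structure idea under my own assumptions: `ContextTaggedMemory` and its methods are hypothetical, and a memory is either tagged to one room or available everywhere.

```python
from collections import defaultdict


class ContextTaggedMemory:
    """Toy store in which some memories are tagged to the room they formed in."""

    def __init__(self) -> None:
        self.by_room = defaultdict(set)  # room -> memories tagged to that room
        self.untagged = set()            # memories available in every room

    def remember(self, memory, room=None):
        # Passing a room tags the memory to it (the "wrong association" case).
        if room is None:
            self.untagged.add(memory)
        else:
            self.by_room[room].add(memory)

    def recall(self, memory, current_room):
        # A room-tagged memory is only available while you are in that room.
        return memory in self.untagged or memory in self.by_room.get(current_room, set())
```

A memory stored with `room="kitchen"` is recalled in the kitchen, fails in the hallway, and comes back as soon as you return to the kitchen, which is the pattern described above.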

Saved habitual behaviours for a specific room mean that, for example, ‘IF’ you change some aspect of a well-used room, you’ll likely find yourself automatically opening the wrong drawer as you reach for a spoon, AND it will take ages for you to adjust to the changes.

The ‘oddities’ that I describe above have actually been noted by researchers, who strangely never mention ‘simulation processing overhead artefacts’ as a possible explanation for them.

What ELSE would perhaps be an ‘obvious’ outcome of the simulation WAITING for you to put your engaged, focused attention on something before it ‘renders’ it?

Let’s have a THINK . . . well, in an entirely made-up fake reality, the more detail you zoom in on and focus upon, the more OBSERVABLY obvious the . . .

“Don’t do anything until the viewer applies direct conscious attention to ‘whatever’ they are looking at” effect will become.

The smaller the scale of consciously observed investigation (engaged attention), the more noticeable this bizarreness is likely to become.

In other words, the smaller the detail an observer puts their focused, engaged attention on, the more OBSERVABLY noticeable this easily deducible, processing-saving ‘wait for the observer to CONSCIOUSLY OBSERVE before you do anything’ strategy will become.
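In programming terms, ‘wait for the observer before you do anything’ is lazy evaluation: a value is computed only when it is first accessed, and then memoized. This Python sketch uses the standard library’s `functools.cached_property`; the `Particle` class, its `seed`, and the arithmetic standing in for an ‘expensive’ calculation are hypothetical.

```python
from functools import cached_property


class Particle:
    """Hypothetical particle whose detailed state is computed only when first observed."""

    compute_count = 0  # class-wide count of expensive computations performed

    def __init__(self, seed: int) -> None:
        self.seed = seed  # cheap description stored up front

    @cached_property
    def detailed_state(self) -> int:
        # Deferred work: runs on first access only, then the result is cached.
        Particle.compute_count += 1
        return (self.seed * 2654435761) % 2**32  # stand-in for a real calculation
```

Creating a thousand `Particle` objects costs no detailed computation at all; `compute_count` rises only when a particular particle’s `detailed_state` is read, and reading it again reuses the cached value.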

‘IF’ we are in a simulation and EVERYTHING is PERCEPTUAL, then zooming in and consciously engaging your attention on finer and finer details of the ‘made up, pretend, assumed to be fundamental particles of reality’ will eventually bring the (simulated) attentive viewer to a point where it becomes obvious, even to (simulated) professionally trained observers, that changes in the rendering of the fundamental perceptual units are not actually initiated until a viewer’s engaged attention is consciously applied to them.

With something this stupidly obvious, what strategies would you, as a simulation designer, use to prevent your simulated researchers from REALLY realizing its significance?

‘IF’ you, reading this, ARE an academic and/or scientist, and ‘IF’ you were our hypothetical simulation’s designer, how would you MANAGE YOURSELF as you read these pages? As a simulation designer, what awareness and cognitive management strategies would you implement to make sure you yourself would dismiss my very rational, reasoned and logical presentations here? What individual management strategies would you use to make sure that an academic or scientist reading this couldn’t think clearly enough to evaluate EVERYTHING presented on these pages objectively and/or IMPARTIALLY?

I have read some papers in which researchers HAVE ACTUALLY managed to suggest ‘artificial reality’ as an explanation for fundamental particle quirkiness, BUT once again no one takes much notice. And of course no one has seriously looked for MACRO SCALE evidence that we are in some sort of artificial reality; nor has anyone been allowed to apply extended THINKING ATTENTION to the problem, so apparently no one has figured out any of the easily deduced, observable and impossible-to-hide differences that would be EXPECTED ‘IF’ we are in a simulated reality that is a PROJECT. Confirmation bias is the most obvious culprit.

It is a FACT that there are loads of physically observable effects which you’d EXPECT if various buffering, memory caching and processing reduction techniques were being applied to our reality here . . .

As one of the rare COMPETENT researchers (likely designated as an OFFICIAL researcher by the simulation) who is ‘worryingly’ seriously trying to get a handle on some of the bizarre phenomena presented here, is Dean Radin subjected to harassment by our very own easily observed Agent Smiths? They are, of course, another easily deduced and seriously likely possibility in a simulation, particularly one that is specifically attempting to get away with rendering self-aware, supposedly free-thinking people.

Strangely, the harassers do make a big effort to hound Dean, which of course is a good indication that he’s pursuing worryingly decent lines of research (Dean Radin’s blog is here).

This page and the last were a strategic diversion. I’d be embarrassed if anyone imagined that my THINKING efforts, and the scale of my THINKING on these subjects, were all confined to just one little cubbyhole topic area . . .

The next page will return to discussing realistic possibilities for slowing down technological advances, as well as presenting an abundance of evidence that this strategy is also operational . . .
